Parallelization with Dask

This notebook contains a series of experiments that generate multiple samples in parallel using Dask. Many of the simulations in this work were run in parallel, with the setup configured using Dask. We include here the code that was used to create these simulations.

import itertools
from typing import NamedTuple

import numpy as np
import pandas as pd
import ipyparallel as ipp
from distributed import progress

import smpsite as smp

1. Dask Setup

In this case, we work with a total of 60 workers, each of which runs a different simulation for a given set of parameters. The workers are started as ipyparallel engines and then turned into a Dask cluster with become_dask().

# Number of ipyparallel engines to start; each engine hosts one Dask worker
number_of_clusters = 60

rc = ipp.Cluster(n=number_of_clusters).start_and_connect_sync()
dask_client = rc.become_dask()
dask_client

Client-21b728d6-fcba-11ed-82dc-d2fa4b15a597

| Connection method: Direct | Dashboard: /user/facusapienza21/ODINN/proxy/8787/status |

Scheduler-e27d3a3e-8311-4436-ba30-e816174b04cd

| Comm: tcp://192.168.11.89:39609 | Workers: 22 | Total threads: 22 | Total memory: 84.69 GiB |

Each of the 22 workers listed reports 1 thread and 3.85 GiB of memory.


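The client summary above reports only 22 Dask workers at the time it was displayed, fewer than the 60 engines requested. Check that all engines are connected: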

dview = rc[:]

len(dview)

60
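If the engines are still registering when the next cells run, a simple polling loop can block until the expected number is reached. The sketch below is a generic helper written for illustration: wait_until is a hypothetical name, and the predicate in the usage comment assumes ipyparallel's Client.ids attribute (the list of connected engine IDs); neither is part of the original notebook.

```python
import time
from typing import Callable


def wait_until(predicate: Callable[[], bool], timeout: float = 60.0, interval: float = 1.0) -> bool:
    """Poll `predicate` until it returns True or `timeout` seconds elapse.

    Hypothetical helper for illustration: returns True on success, False on timeout.
    """
    deadline = time.monotonic() + timeout
    while True:
        if predicate():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


# Usage sketch (assumes `rc` is the ipyparallel Client created above):
# ready = wait_until(lambda: len(rc.ids) >= number_of_clusters, timeout=300)
```

On the Dask side, the distributed Client also provides wait_for_workers(n_workers), which may be the more direct option once become_dask() has been called.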

2. Macro function definition

We now define a function that runs the simulations for a single combination of parameters. For this to work in parallel, the Params class needs to be defined inside the function. We also add a hash of the parameter values to the output to keep track of each simulation.

def ipp_simulate_estimations(N,
                             n0,
                             kappa_within_site,
                             site_lat,
                             site_long,
                             outlier_rate,
                             secular_method,
                             kappa_secular,
                             ignore_outliers,
                             seed,
                             n_sim):

    class Params(NamedTuple):
        """
        Macro to encapsulate all the parameters in the sampling model.
        """
        # Number of sites
        N : int
        # Number of samples per site
        n0 : int
        # Concentration parameter within site
        kappa_within_site : float
        # Latitude and longitude of site
        site_lat : float
        site_long : float
        # Proportion of outliers to be sampled from uniform distribution
        outlier_rate : float
        # Method to sample secular variation. Options are ("tk03", "G", "Fisher")
        secular_method : str
        kappa_secular : float  # Just needed for Fisher sampler

    params = Params(N=N,
                    n0=n0,
                    kappa_within_site=kappa_within_site,
                    site_lat=site_lat,
                    site_long=site_long,
                    outlier_rate=outlier_rate,
                    secular_method=secular_method,
                    kappa_secular=kappa_secular)

    # Create all samples
    df_tot = smp.simulate_estimations(params,
                                      n_iters=n_sim,
                                      ignore_outliers=ignore_outliers,
                                      seed=seed)

    simulation_hash = hash((N, n0, kappa_within_site, site_lat, site_long, outlier_rate, secular_method, kappa_secular))
    df_tot["hash"] = simulation_hash

    return df_tot
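Python's built-in hash() of a string is randomized per interpreter process unless PYTHONHASHSEED is fixed, and since Python 3.10 the hash of a NaN depends on object identity. The value stored in the "hash" column can therefore differ between engines for identical parameters and cannot be recomputed later; ignore_outliers is also not part of the hashed tuple. A minimal sketch of a process-independent alternative, assuming a truncated SHA-1 digest of the parameters' repr is an acceptable identifier (stable_param_hash is a hypothetical name, not part of smpsite):

```python
import hashlib


def stable_param_hash(*values) -> str:
    """Return a hex digest that is identical across processes for equal inputs."""
    # Convert NumPy scalars (which define .item()) to plain Python objects,
    # so the digest does not depend on NumPy's repr format
    plain = tuple(v.item() if hasattr(v, "item") else v for v in values)
    # repr of a tuple of ints, floats, strings and None is deterministic
    return hashlib.sha1(repr(plain).encode("utf-8")).hexdigest()[:16]


# Usage sketch inside ipp_simulate_estimations (hypothetical change):
# df_tot["hash"] = stable_param_hash(N, n0, kappa_within_site, site_lat, site_long,
#                                    outlier_rate, secular_method, kappa_secular,
#                                    ignore_outliers)
```

The digest is a string rather than an integer, which works equally well as a groupby key.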

3. Parameter space exploration

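We now define the parameter choices used for the different simulations. Each subsection below corresponds to one figure and overwrites params_iter and output_file_name, so only the subsection for the desired figure should be run before Section 4.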

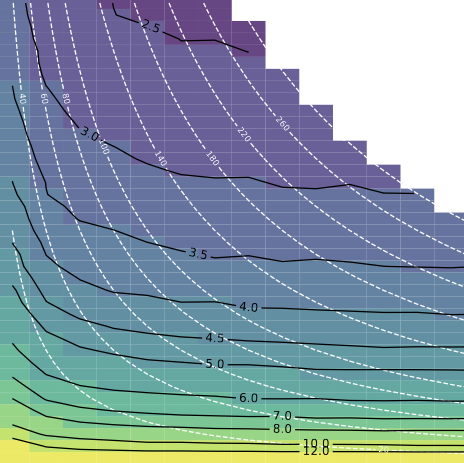

3.1. Figure 1 - Heatmap with no outliers

min_n, max_n = 1, 300
n_simulations = 10000
N_max = 100
n0_max = 20

params_iter = {'N': np.arange(1, N_max+1, 1),
               'n0': np.arange(1, n0_max+1, 1),
               'kappa_within_site': 50,
               'site_lat': [30.0],
               'site_long': [0.0],
               'outlier_rate': [0.0],
               'secular_method': ["G"],
               'kappa_secular': [np.nan],
               'ignore_outliers': ["False"]}

output_file_name = "fig1a_bis_{}sim".format(n_simulations)

# Cartesian product of all parameter values, flattened to one array per parameter
params_iter_mesh = np.meshgrid(*[params_iter[key] for key in params_iter.keys()])
for i, key in enumerate(params_iter.keys()):
    params_iter[key] = params_iter_mesh[i].ravel()

# Keep only combinations whose total number of samples N * n0 lies in [min_n, max_n]
all_n_tot = params_iter['N'] * params_iter['n0']
valid_index = (min_n <= all_n_tot) & (all_n_tot <= max_n)
n_tasks = np.sum(valid_index)
print("Total number of simulations: ", n_tasks)

# Apply the same random permutation to every parameter array
indices = np.arange(n_tasks)
np.random.shuffle(indices)
for key in params_iter.keys():
    params_iter[key] = params_iter[key][valid_index]
    # Shuffle
    params_iter[key] = params_iter[key][indices]

# One random seed per task and the number of repetitions per task
params_iter["seed"] = np.random.randint(0, 2**32-1, n_tasks)
params_iter["n_sim"] = np.repeat(n_simulations, n_tasks)

Total number of simulations: 824
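Every subsection in this section repeats the same steps: take the Cartesian product of the parameter values, keep the combinations whose total sample count \(N n_0\) lies in [min_n, max_n], shuffle them, and attach a seed to each. A stdlib-only sketch of those steps, swapping in itertools.product for np.meshgrid and returning a list of dicts instead of a dict of arrays; build_tasks is a hypothetical helper name, and the seed range mirrors the one used above:

```python
import itertools
import random


def build_tasks(grid: dict, min_n: int, max_n: int, n_sim: int, rng: random.Random) -> list:
    """Expand `grid` (parameter name -> list of values) into shuffled task dicts.

    Only combinations with min_n <= N * n0 <= max_n are kept.
    """
    keys = list(grid)
    tasks = []
    for combo in itertools.product(*(grid[k] for k in keys)):
        task = dict(zip(keys, combo))
        if min_n <= task["N"] * task["n0"] <= max_n:
            tasks.append(task)
    rng.shuffle(tasks)  # randomize the submission order
    for task in tasks:
        task["seed"] = rng.randrange(0, 2**32 - 1)  # one seed per task
        task["n_sim"] = n_sim
    return tasks


# Same grid as Section 3.1 (Figure 1)
grid_fig1 = {
    "N": list(range(1, 101)),
    "n0": list(range(1, 21)),
    "kappa_within_site": [50],
    "site_lat": [30.0],
    "site_long": [0.0],
    "outlier_rate": [0.0],
    "secular_method": ["G"],
    "kappa_secular": [float("nan")],
    "ignore_outliers": ["False"],
}
tasks = build_tasks(grid_fig1, min_n=1, max_n=300, n_sim=10000, rng=random.Random(0))
print(len(tasks))  # 824, matching the count printed above
```

The per-parameter sequences that Client.map expects can then be recovered with, for example, [t["N"] for t in tasks].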

3.2. Figure 3e and 3f - Intersection of errors

min_n, max_n = 100, 100
n_simulations = 1000

params_iter = {'N': [100, 20],
               'n0': [5, 1],
               'kappa_within_site': np.arange(10, 101, 10),
               'site_lat': np.arange(0, 81, 10),
               'site_long': [0.0],
               'outlier_rate': np.arange(0.30, 0.70, .04),
               'secular_method': ["G"],
               'kappa_secular': [np.nan],
               'ignore_outliers': [None]}

params_iter_mesh = np.meshgrid(*[params_iter[key] for key in params_iter.keys()])
for i, key in enumerate(params_iter.keys()):
    params_iter[key] = params_iter_mesh[i].ravel()

all_n_tot = params_iter['N'] * params_iter['n0']
valid_index = (min_n <= all_n_tot) & (all_n_tot <= max_n)
n_tasks = np.sum(valid_index)
print("Total number of simulations: ", n_tasks)

indices = np.arange(n_tasks)
np.random.shuffle(indices)
for key in params_iter.keys():
    params_iter[key] = params_iter[key][valid_index]
    # Shuffle
    params_iter[key] = params_iter[key][indices]

params_iter["seed"] = np.random.randint(0, 2**32-1, n_tasks)
params_iter['ignore_outliers'] = np.where(np.array(params_iter['n0']) == 5, "True", "vandamme")
params_iter["n_sim"] = np.repeat(n_simulations, n_tasks)

output_file_name = "fig3e_{}sim".format(n_simulations)

Total number of simulations: 1800
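The count printed above follows directly from the grid. With \(N n_0\) fixed at 100, only the designs \((N, n_0) = (100, 1)\) and \((20, 5)\) survive the filter, and each is combined with the 10 values of kappa_within_site (10 to 100), the 9 site latitudes (0 to 80) and the 10 outlier rates that np.arange(0.30, 0.70, .04) produces (0.30 to 0.66):

```latex
n_{\text{tasks}}
  = \underbrace{2}_{(N,\,n_0)}
    \times \underbrace{10}_{\kappa_{\text{within site}}}
    \times \underbrace{9}_{\text{site latitude}}
    \times \underbrace{10}_{\text{outlier rate}}
  = 1800
```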

3.3. Figure 2 - Function of the total number of samples \(n\)

min_n, max_n = 1, 300
n_simulations = 2000

params_iter = {'N': np.arange(min_n, max_n, 1),
               'n0': np.arange(1, 8, 1),
               'kappa_within_site': 50,
               'site_lat': [30.0],
               'site_long': [0.0],
               'outlier_rate': [0.0],
               'secular_method': ["G"],
               'kappa_secular': [np.nan],
               'ignore_outliers': ["False"]}

output_file_name = "fig2ab_{}sim".format(n_simulations)

params_iter_mesh = np.meshgrid(*[params_iter[key] for key in params_iter.keys()])
for i, key in enumerate(params_iter.keys()):
    params_iter[key] = params_iter_mesh[i].ravel()

all_n_tot = params_iter['N'] * params_iter['n0']
valid_index = (min_n <= all_n_tot) & (all_n_tot <= max_n) #& (all_n_tot % 5 == 0)
n_tasks = np.sum(valid_index)
print("Total number of simulations: ", n_tasks)

indices = np.arange(n_tasks)
np.random.shuffle(indices)
for key in params_iter.keys():
    params_iter[key] = params_iter[key][valid_index]
    # Shuffle
    params_iter[key] = params_iter[key][indices]

params_iter["seed"] = np.random.randint(0, 2**32-1, n_tasks)
params_iter["n_sim"] = np.repeat(n_simulations, n_tasks)

Total number of simulations: 776
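The count of 776 can be checked by hand. Here N runs from 1 to 299 (np.arange excludes its stop value), so for each \(n_0 = k\) the admissible values of \(N\) are \(1, \dots, \min(299, \lfloor 300/k \rfloor)\):

```latex
n_{\text{tasks}}
  = \sum_{k=1}^{7} \min\left(299, \left\lfloor \frac{300}{k} \right\rfloor\right)
  = 299 + 150 + 100 + 75 + 60 + 50 + 42
  = 776
```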

3.4. Figure 2 - Function of the total number of sites \(N\)

min_n, max_n = 1, 3000
n_simulations = 2000

params_iter = {'N': np.insert(np.arange(2, 101, 2), 0, 1),
               'n0': np.arange(1, 8, 1),
               'kappa_within_site': 50,
               'site_lat': [30.0],
               'site_long': [0.0],
               'outlier_rate': [0.0],
               'secular_method': ["G"],
               'kappa_secular': [np.nan],
               'ignore_outliers': ["False"]}

output_file_name = "fig2cd_{}sim".format(n_simulations)

params_iter_mesh = np.meshgrid(*[params_iter[key] for key in params_iter.keys()])
for i, key in enumerate(params_iter.keys()):
    params_iter[key] = params_iter_mesh[i].ravel()

all_n_tot = params_iter['N'] * params_iter['n0']
valid_index = (min_n <= all_n_tot) & (all_n_tot <= max_n) #& (all_n_tot % 5 == 0)
n_tasks = np.sum(valid_index)
print("Total number of simulations: ", n_tasks)

indices = np.arange(n_tasks)
np.random.shuffle(indices)
for key in params_iter.keys():
    params_iter[key] = params_iter[key][valid_index]
    # Shuffle
    params_iter[key] = params_iter[key][indices]

params_iter["seed"] = np.random.randint(0, 2**32-1, n_tasks)
params_iter["n_sim"] = np.repeat(n_simulations, n_tasks)

Total number of simulations: 357
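The N grid here, np.insert(np.arange(2, 101, 2), 0, 1), is 1 followed by the even numbers from 2 to 100. Since the largest total, 100 × 7 = 700, is well below max_n = 3000, the filter keeps every combination. A stdlib sketch that reproduces the grid and the count (the variable names are illustrative only):

```python
# Equivalent of np.insert(np.arange(2, 101, 2), 0, 1): 1 followed by 2, 4, ..., 100
N_values = [1] + list(range(2, 101, 2))
n0_values = list(range(1, 8))  # np.arange(1, 8, 1)

min_n, max_n = 1, 3000
n_tasks = sum(1 for N in N_values for n0 in n0_values if min_n <= N * n0 <= max_n)
print(len(N_values), n_tasks)  # 51 357
```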

3.5. Figure 3 - Intersection

min_n, max_n = 100, 100
n_simulations = 5000

params_iter = {'N': [100, 20],
               'n0': [1, 5],
               'kappa_within_site': 50,
               'site_lat': [30.0],
               'site_long': [0.0],
               'outlier_rate': np.arange(0, 0.61, 0.02),
               'secular_method': ["G"],
               'kappa_secular': [np.nan],
               'ignore_outliers': ["True", "False", "vandamme"]}

output_file_name = "fig3c_{}sim".format(n_simulations)

params_iter_mesh = np.meshgrid(*[params_iter[key] for key in params_iter.keys()])
for i, key in enumerate(params_iter.keys()):
    params_iter[key] = params_iter_mesh[i].ravel()

all_n_tot = params_iter['N'] * params_iter['n0']
valid_index = (min_n <= all_n_tot) & (all_n_tot <= max_n)
n_tasks = np.sum(valid_index)
print("Total number of simulations: ", n_tasks)

indices = np.arange(n_tasks)
np.random.shuffle(indices)
for key in params_iter.keys():
    params_iter[key] = params_iter[key][valid_index]
    # Shuffle
    params_iter[key] = params_iter[key][indices]

# params_iter["ignore_outliers"] = params_iter["n0"] == 5
params_iter["seed"] = np.random.randint(0, 2**32-1, n_tasks)
params_iter["n_sim"] = np.repeat(n_simulations, n_tasks)

Total number of simulations: 186
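np.arange with a floating-point step can gain or lose its last point through rounding, and the NumPy documentation recommends numpy.linspace for non-integer steps. Here np.arange(0, 0.61, 0.02) yields the 31 rates 0.00, 0.02, ..., 0.60, and the total is 2 designs × 31 rates × 3 outlier-handling options = 186. A stdlib sketch that builds the same rates from integer counts, so that the endpoint is explicit (the names are illustrative only):

```python
# 31 outlier rates 0.00, 0.02, ..., 0.60 built from integers to avoid float drift
outlier_rates = [i / 50 for i in range(31)]

designs = [(100, 1), (20, 5)]  # (N, n0) pairs with N * n0 == 100
ignore_options = ["True", "False", "vandamme"]

n_tasks = len(designs) * len(outlier_rates) * len(ignore_options)
print(outlier_rates[-1], n_tasks)  # 0.6 186
```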

3.6. Figure 4 - Boxplot

min_n, max_n = 96, 104
n_simulations = 5000

params_iter = {'N': [100, 50, 33, 25, 20, 17, 14],
               'n0': [1, 2, 3, 4, 5, 6, 7],
               'kappa_within_site': 50,
               'site_lat': [30.0],
               'site_long': [0.0],
               'outlier_rate': [0.0, 0.10, 0.20, 0.40, 0.60],  # np.arange(0, 0.61, 0.05), # Just select a few outlier values for the plot.
               'secular_method': ["G"],
               'kappa_secular': [np.nan],
               'ignore_outliers': ["False", "True", "vandamme"]}

params_iter_mesh = np.meshgrid(*[params_iter[key] for key in params_iter.keys()])
for i, key in enumerate(params_iter.keys()):
    params_iter[key] = params_iter_mesh[i].ravel()

all_n_tot = params_iter['N'] * params_iter['n0']
valid_index = (min_n <= all_n_tot) & (all_n_tot <= max_n)
n_tasks = np.sum(valid_index)
print("Total number of simulations: ", n_tasks)

indices = np.arange(n_tasks)
np.random.shuffle(indices)
for key in params_iter.keys():
    params_iter[key] = params_iter[key][valid_index]
    # Shuffle
    params_iter[key] = params_iter[key][indices]

params_iter["seed"] = np.random.randint(0, 2**32-1, n_tasks)
params_iter["n_sim"] = np.repeat(n_simulations, n_tasks)

output_file_name = "fig4_{}sim".format(n_simulations)

Total number of simulations: 105
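With min_n, max_n = 96, 104, the filter keeps only the seven designs in which \(N n_0\) is close to 100 (for example 33 × 3 = 99 and 17 × 6 = 102), giving 7 × 5 outlier rates × 3 options = 105 tasks. A quick stdlib check of which (N, n0) pairs survive (the names are illustrative only):

```python
N_values = [100, 50, 33, 25, 20, 17, 14]
n0_values = [1, 2, 3, 4, 5, 6, 7]
min_n, max_n = 96, 104

# (N, n0) pairs whose total number of samples lies in [min_n, max_n]
pairs = [(N, n0) for N in N_values for n0 in n0_values if min_n <= N * n0 <= max_n]
print(pairs)  # [(100, 1), (50, 2), (33, 3), (25, 4), (20, 5), (17, 6), (14, 7)]

n_tasks = len(pairs) * 5 * 3  # 5 outlier rates x 3 ignore_outliers options
print(n_tasks)  # 105
```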

4. Run Simulation

Finally, we submit one task per parameter combination to the Dask cluster and concatenate the resulting DataFrames.

# One future per parameter combination; each argument is an aligned array
task = dask_client.map(ipp_simulate_estimations,
                       params_iter['N'],
                       params_iter['n0'],
                       params_iter['kappa_within_site'],
                       params_iter['site_lat'],
                       params_iter['site_long'],
                       params_iter['outlier_rate'],
                       params_iter['secular_method'],
                       params_iter['kappa_secular'],
                       params_iter['ignore_outliers'],
                       params_iter['seed'],
                       params_iter["n_sim"])

# Concatenate all per-task DataFrames on the cluster
res = dask_client.submit(pd.concat, task)
progress(res)
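The pattern above, mapping one function over aligned per-parameter sequences and then concatenating the per-task results, can be reproduced locally for debugging without a cluster. The sketch below swaps in the standard library's concurrent.futures.ThreadPoolExecutor for the Dask client and a toy fake_simulation (a hypothetical stand-in) for ipp_simulate_estimations. Threads do not give true parallelism for CPU-bound pure-Python code because of the GIL, so this only checks the plumbing.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import chain


def fake_simulation(N, n0, seed, n_sim):
    """Toy stand-in for ipp_simulate_estimations: returns n_sim result rows."""
    return [{"N": N, "n0": n0, "seed": seed, "iteration": i} for i in range(n_sim)]


# Aligned per-parameter sequences, like the arrays in params_iter
Ns = [100, 20, 50]
n0s = [1, 5, 2]
seeds = [11, 22, 33]
n_sims = [4, 4, 4]

with ThreadPoolExecutor(max_workers=3) as pool:
    # Executor.map, like Client.map, takes one iterable per positional argument
    per_task_rows = list(pool.map(fake_simulation, Ns, n0s, seeds, n_sims))

# Analogue of dask_client.submit(pd.concat, task): flatten all per-task outputs
all_rows = list(chain.from_iterable(per_task_rows))
print(len(all_rows))  # 12
```

Running a handful of tasks this way is a cheap check that the per-task function and the concatenation step work before launching hundreds of tasks on the cluster.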

5. Save outputs

%%time
df_all = res.result()
df_all

df_all.to_csv('../outputs/' + output_file_name + '_total.csv')

# Summary table
df_summary = df_all.groupby("hash", as_index=False).apply(smp.summary_simulations)
df_summary

df_summary.to_csv('../outputs/' + output_file_name + '_summary.csv')
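DataFrame.to_csv does not create missing directories, so the cells above fail if ../outputs does not exist yet. A small sketch using pathlib, with a hypothetical helper output_paths, that creates the directory if needed and builds both file names from output_file_name:

```python
from pathlib import Path


def output_paths(output_file_name: str, out_dir: str = "../outputs") -> tuple:
    """Create `out_dir` if needed and return the (total, summary) CSV paths."""
    directory = Path(out_dir)
    directory.mkdir(parents=True, exist_ok=True)
    total_path = directory / f"{output_file_name}_total.csv"
    summary_path = directory / f"{output_file_name}_summary.csv"
    return total_path, summary_path


# Usage sketch with the objects defined above:
# total_path, summary_path = output_paths(output_file_name)
# df_all.to_csv(total_path)
# df_summary.to_csv(summary_path)
```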